Deployment architectures

VoiceXML Connector can be deployed in several configurations, distinguished mainly by who hosts it: self-hosted (deployed by you) or Nuance-hosted (deployed as cloud services).

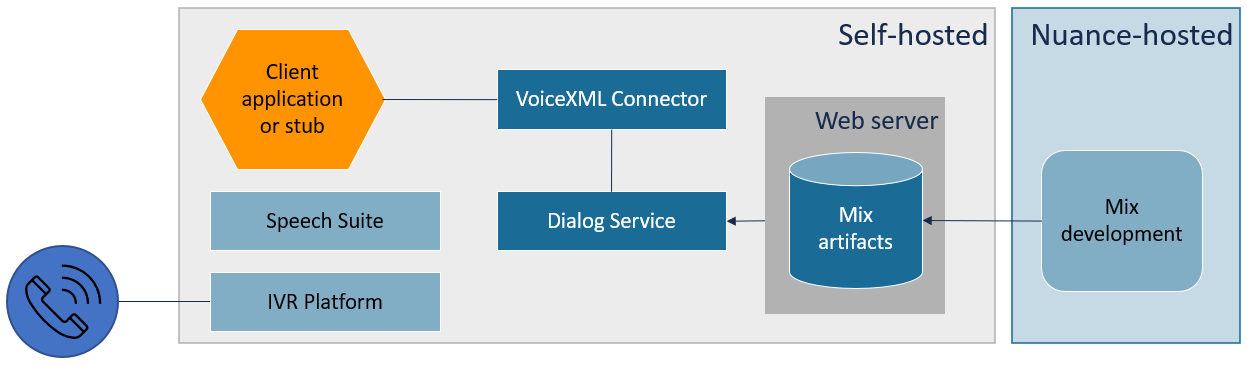

Self-hosted deployment

VoiceXML Connector resides in your network with your existing Speech Suite installation. You install the Connector either as a cloud-native application (loading the Connector as a Docker image and deploying it with Helm on a Kubernetes cluster) or as a Java servlet (installing it as a war file on Tomcat).

In a typical deployment, you install all the self-hosted components shown in the diagram.

High-level deployment procedure

- Decide whether to deploy in a Kubernetes cluster or as a Tomcat webapp.

- Install prerequisites on a network that includes Speech Suite on an IVR platform.

- Install the Dialog service or configure a connection to a Nuance-hosted Dialog service. If you self-host the service, you must install it in a Kubernetes cluster (not as a Tomcat webapp), and you need a web server for Mix dialog artifacts.

- Deploy VoiceXML Connector. The system is then ready for application connections. See Workflow for development and runtime.
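The constraints in this procedure can be captured as a small plan check. The sketch below is a hypothetical Python helper, not a Nuance tool: the `DeploymentPlan` fields, the `validate` function, and the artifact server URL are illustrative assumptions. It encodes only the rules stated above: the Connector itself can run in Kubernetes or on Tomcat, while a self-hosted Dialog service must run in Kubernetes and needs a web server for Mix dialog artifacts.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Platform(Enum):
    KUBERNETES = "kubernetes"  # Docker image deployed with Helm
    TOMCAT = "tomcat"          # war file deployed on Tomcat


@dataclass
class DeploymentPlan:
    connector_platform: Platform                # either platform is allowed for the Connector
    self_host_dialog: bool                      # False: connect to the Nuance-hosted DLGaaS
    dialog_platform: Optional[Platform] = None
    artifact_server_url: Optional[str] = None   # hypothetical: web server for Mix dialog artifacts


def validate(plan: DeploymentPlan) -> List[str]:
    """Return the rule violations in the plan; an empty list means it passes."""
    problems: List[str] = []
    if plan.self_host_dialog:
        # A self-hosted Dialog service cannot run as a Tomcat webapp.
        if plan.dialog_platform is not Platform.KUBERNETES:
            problems.append("self-hosted Dialog service must run in a Kubernetes cluster")
        # Mix dialog artifacts must be served from a web server.
        if not plan.artifact_server_url:
            problems.append("self-hosted Dialog service needs a web server for Mix dialog artifacts")
    return problems


if __name__ == "__main__":
    plan = DeploymentPlan(Platform.TOMCAT, self_host_dialog=True, dialog_platform=Platform.TOMCAT)
    for problem in validate(plan):
        print(problem)
```

A plan that uses the Nuance-hosted Dialog service passes regardless of where the Connector runs, because the Kubernetes and artifact-server rules apply only when you self-host the Dialog service.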

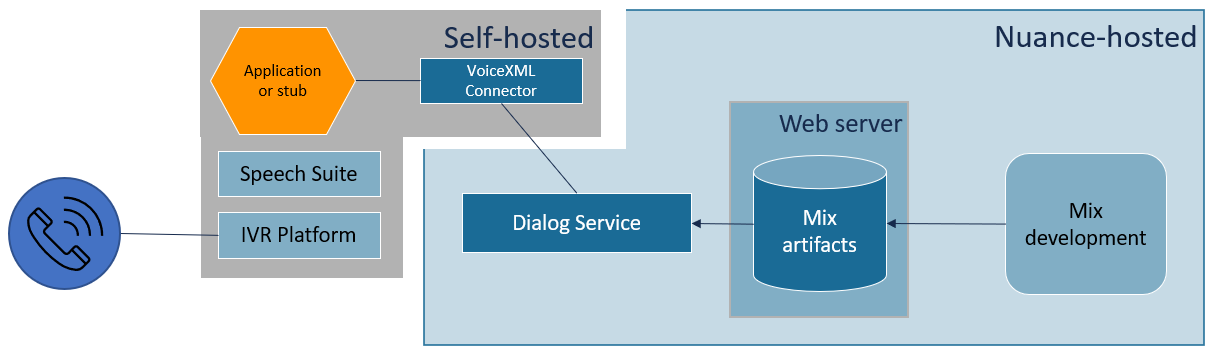

Self-hosted deployment with a Nuance-hosted Dialog service

You can combine a self-hosted deployment with a Nuance-hosted Dialog service (DLGaaS).

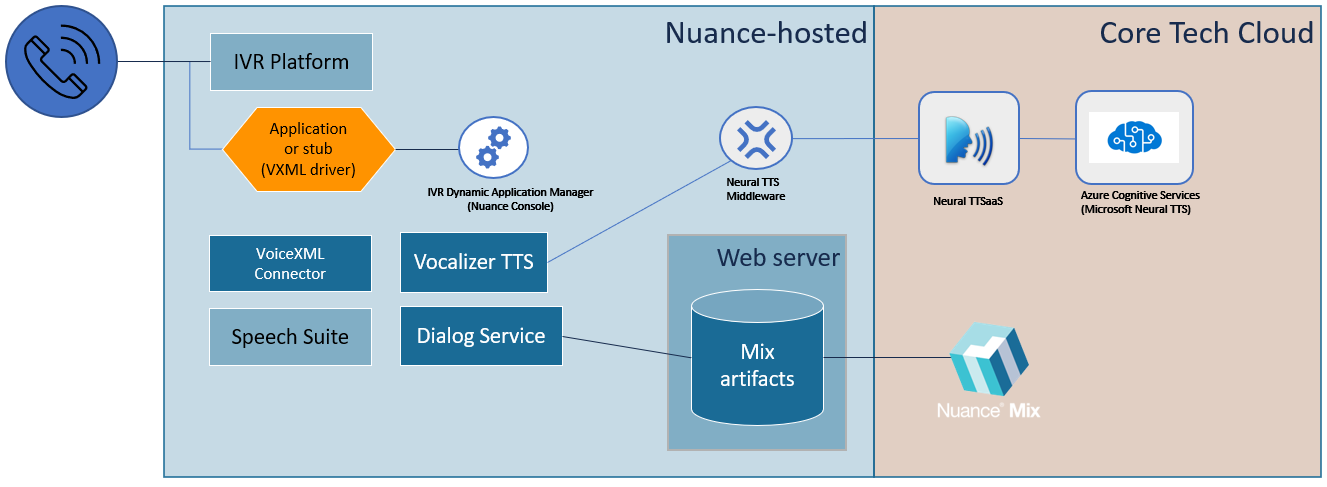

Nuance-hosted deployment

When Nuance hosts the deployment, VoiceXML Connector resides in the cloud along with Speech Suite and other runtime components. Nuance manages installation, configuration, and operations. You're responsible for the application or stub and the configuration of each start request.

In addition, Nuance-hosted deployments on Azure offer features, such as neural text-to-speech (TTS), that are not available in self-hosted environments:

The product design gives you do-it-yourself (DIY) capabilities. Using the IVR Dynamic Application Manager (Nuance Console), you feed settings into your stub application (the VXML driver). Using Mix, you supply the settings that bring neural TTS to your Nuance-hosted IVR.

Neural TTS is hosted outside of the Speech Suite platform. If neural TTS engines are out of service for any reason, your Speech Suite applications automatically use Vocalizer as a fallback.
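Speech Suite performs this fallback for you; you do not implement it. To make the behavior concrete, the following Python sketch models it with two hypothetical callables standing in for the neural TTS engine and Vocalizer. None of these names are Speech Suite APIs, and "out of service for any reason" is modeled simply as the neural callable raising an exception.

```python
from typing import Callable, Tuple

Synthesizer = Callable[[str], bytes]  # hypothetical: text in, audio bytes out


def synthesize_with_fallback(text: str, neural: Synthesizer, vocalizer: Synthesizer) -> Tuple[str, bytes]:
    """Model of the fallback: try neural TTS first, use Vocalizer if it fails for any reason."""
    try:
        return "neural", neural(text)
    except Exception:  # any failure of the neural engine triggers the fallback
        return "vocalizer", vocalizer(text)


if __name__ == "__main__":
    def neural_down(text: str) -> bytes:
        raise ConnectionError("neural TTS engine unreachable")

    engine, audio = synthesize_with_fallback("Welcome", neural_down, lambda t: t.encode())
    print(engine)  # vocalizer
```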

In special cases, your application might need specific neural TTS resources. (For example, TTS that is tuned for a rare language and dialect.) In these cases, Nuance provides a URL for the application to specify in the session Start request.


Workflow for development and runtime

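The development and runtime workflow is almost identical for self-hosted and Nuance-hosted environments. The first list below describes the self-hosted workflow; the second describes the Nuance-hosted workflow, which adds neural TTS configuration and uses a stub to start VoiceXML Connector sessions.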

- Build Mix dialog models with the Nuance Mix dashboard, and deploy them to the artifact server.

Note: When you create a Mix project, the versions of all dialog model components must be compatible with your versions of the Dialog service, VoiceXML Connector, and Speech Suite. For details, see Integrating with Speech Suite in the Nuance Mix documentation.

- Download the Mix ASR and NLU models and deploy them on an application file server. You can also store any needed recognition grammars and audio files on that server.

- Invoke the Mix dialog models via a <subdialog> in your client application.

- Test the whole IVR application, and deploy to production. At runtime, the IVR platform receives incoming calls and executes the client application on Speech Suite. The client application requests a Mix model, the Dialog service fetches it, VoiceXML Connector converts it to VoiceXML format, and finally Speech Suite and the platform execute the converted dialog.

Note: A client application is a complete application designed and implemented on your IVR platform. A stub is a minimal client application that invokes a Mix dialog model. The client application and stub are sometimes called VXML drivers because both are VoiceXML documents.
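As an illustration of the <subdialog> step, the following Python sketch generates a minimal VoiceXML 2.1 stub using only the standard library. The Connector URL, the `mixDialog` subdialog name, and the `appId` parameter are hypothetical placeholders; see Invoking VXML Connector from applications for the actual URL and parameters your deployment expects.

```python
import xml.etree.ElementTree as ET
from typing import Mapping

VXML_NS = "http://www.w3.org/2001/vxml"


def ecmascript_string(value: str) -> str:
    """Quote a Python string as an ECMAScript string literal for a <param expr>."""
    return "'" + value.replace("\\", "\\\\").replace("'", "\\'") + "'"


def build_stub(connector_url: str, params: Mapping[str, str]) -> str:
    """Return a minimal VoiceXML stub that invokes connector_url via <subdialog>."""
    vxml = ET.Element("vxml", {"version": "2.1", "xmlns": VXML_NS})
    form = ET.SubElement(vxml, "form", {"id": "main"})
    # Hypothetical subdialog name; src is the (hypothetical) VoiceXML Connector endpoint.
    sub = ET.SubElement(form, "subdialog", {"name": "mixDialog", "src": connector_url})
    for name, value in params.items():
        ET.SubElement(sub, "param", {"name": name, "expr": ecmascript_string(value)})
    # When the Mix dialog returns, exit the application.
    filled = ET.SubElement(sub, "filled")
    ET.SubElement(filled, "exit")
    return ET.tostring(vxml, encoding="unicode")


if __name__ == "__main__":
    print(build_stub("https://connector.example.com/vxml", {"appId": "demo"}))
```

For example, `build_stub("https://connector.example.com/vxml", {"appId": "demo"})` returns a document whose `<subdialog>` passes `appId` as the ECMAScript string literal `'demo'`. Quoting values this way keeps the `expr` attribute a valid expression even when a value contains an apostrophe.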

- Build and deploy Mix dialog models with the Nuance Mix dashboard. If you use neural TTS, specify all required TTS configuration, including language, voice, tone, and SSML markup, in the dialogs.


- Write a stub to start VoiceXML Connector sessions. The stub invokes Mix dialog models via <subdialog>.

- Test the whole IVR application, and deploy to production. At runtime, the IVR platform receives incoming calls and executes the client application on Speech Suite. The stub requests a Mix model, the Dialog service fetches it, VoiceXML Connector converts it to VoiceXML format, and finally Speech Suite and the platform execute the converted dialog.


Next steps

Installation: Deploying with Helm, Deploying with Tomcat, or Hardware and memory for self-hosted environments.

Developing a <subdialog>: see Invoking VXML Connector from applications.